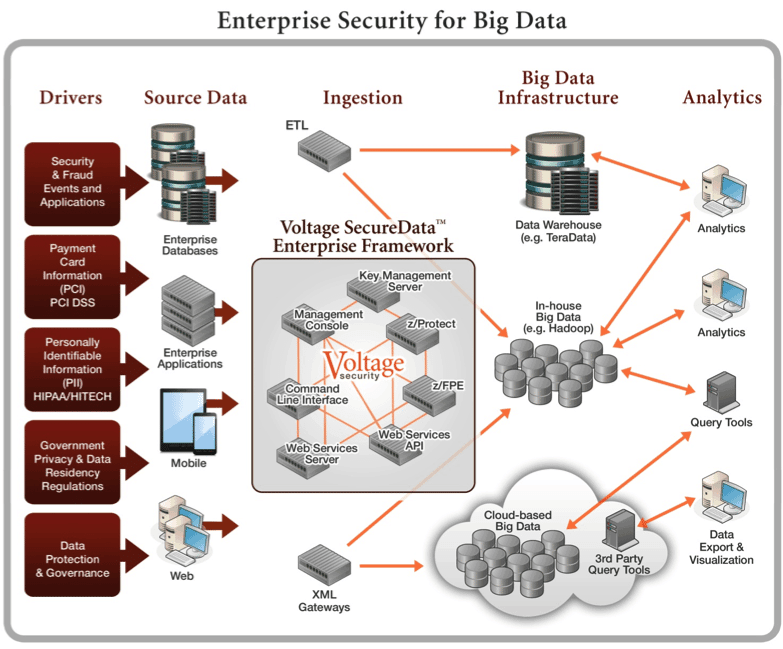

Whether just beginning or well underway with Big Data initiatives, organizations need data protection that mitigates the risk of a breach, ensures global regulatory compliance, and delivers the performance and scale to adapt to the fast-changing ecosystem of Apache Hadoop tools and technology.

Business insights from big data analytics promise major benefits to enterprises, but launching these initiatives also presents potential risks. New architectures, including Hadoop, can aggregate structured, semi-structured and unstructured data, perform parallel computations on large datasets, and continuously feed the data lake that enables data scientists to see patterns and trends. Because companies can now cost-effectively retain massive amounts of data for analysis, potentially representing years of information, these new systems can increase both the risk and the magnitude of a data breach.

Sensitive data can be exposed, violating compliance and data security regulations, and aggregating data across borders can break data residency laws. Finding ways to secure sensitive data effectively while still enabling meaningful analytics can therefore be an obstacle to rolling out big data capabilities across the organization.

Hortonworks partner Voltage Security offers data protection solutions that protect data from any source, in any format, before it enters Hadoop. With Voltage Format-Preserving Encryption™ (FPE), structured, semi-structured or unstructured data can be encrypted at the source and stay protected throughout the data life cycle, wherever it resides and however it is used. Because protection travels with the data, there are no security gaps as data moves into and out of Hadoop and other environments. FPE supports data de-identification: sensitive fields such as Social Security numbers stay private and confidential while keeping a format that remains useful for analytics. Unlike solutions that encrypt at the Hadoop Distributed File System (HDFS) layer, this approach protects only the fields that need protecting. This solution can:

- Protect data aggregated on its way into Hadoop, or selectively inside MapReduce jobs

- Preserve format, so protected data looks like the original data (e.g., credit card numbers, Social Security numbers, national ID formats), a vital property for analytics to operate properly

- Provide reversibility, so original data can be securely retrieved as business policy dictates

- Deliver standards-based, patented and NIST-recognized security, with published security proofs
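The format-preservation and reversibility properties listed above can be illustrated with a toy Feistel-network cipher. This is a minimal sketch under stated assumptions, not Voltage's FPE and not a vetted implementation of NIST SP 800-38G FF1: it borrows FF1's idea of ten alternating rounds over the two halves of a string, but swaps in HMAC-SHA256 as the round function. The names `fpe_encrypt`, `protect` and `keep_last` are hypothetical, and the code should not be used to protect real data.

```python
import hashlib
import hmac

DIGITS = "0123456789"
ROUNDS = 10  # even, so each half ends up back at its original length


def _num(s: str, alphabet: str) -> int:
    """Interpret s as a number written in base len(alphabet)."""
    n = 0
    for ch in s:
        n = n * len(alphabet) + alphabet.index(ch)  # ValueError if ch not in alphabet
    return n


def _str(x: int, m: int, alphabet: str) -> str:
    """Write 0 <= x < len(alphabet)**m as exactly m symbols, most significant first."""
    out = []
    for _ in range(m):
        x, d = divmod(x, len(alphabet))
        out.append(alphabet[d])
    return "".join(reversed(out))


def _prf(key: bytes, rnd: int, tweak: str, half: str) -> int:
    """Keyed round function (HMAC-SHA256); an assumption, FF1 itself uses AES."""
    msg = f"{rnd}|{tweak}|{half}".encode()
    return int.from_bytes(hmac.new(key, msg, hashlib.sha256).digest(), "big")


def _halves(text: str) -> tuple[int, int]:
    if len(text) < 2:
        raise ValueError("FPE needs at least 2 symbols")
    u = len(text) // 2
    return u, len(text) - u


def fpe_encrypt(key: bytes, text: str, alphabet: str = DIGITS, tweak: str = "") -> str:
    """Toy Feistel FPE: output has the same length and alphabet as the input."""
    r, (u, v) = len(alphabet), _halves(text)
    a, b = text[:u], text[u:]
    for i in range(ROUNDS):
        m = u if i % 2 == 0 else v
        c = (_num(a, alphabet) + _prf(key, i, tweak, b)) % r**m
        a, b = b, _str(c, m, alphabet)
    return a + b


def fpe_decrypt(key: bytes, text: str, alphabet: str = DIGITS, tweak: str = "") -> str:
    """Inverse of fpe_encrypt: run the rounds backwards, subtracting instead of adding."""
    r, (u, v) = len(alphabet), _halves(text)
    a, b = text[:u], text[u:]
    for i in reversed(range(ROUNDS)):
        m = u if i % 2 == 0 else v
        # Here a is round i's input B and b is its output C.
        prev_a = (_num(b, alphabet) - _prf(key, i, tweak, a)) % r**m
        a, b = _str(prev_a, m, alphabet), a
    return a + b


def _apply_to_digits(value: str, keep_last: int, fn) -> str:
    """Apply fn to the digits of value, skipping separators and the last keep_last digits."""
    pos = [i for i, ch in enumerate(value) if ch in DIGITS]
    head = pos[: max(len(pos) - keep_last, 0)]
    out = list(value)
    for i, ch in zip(head, fn("".join(value[i] for i in head))):
        out[i] = ch
    return "".join(out)


def protect(key: bytes, value: str, keep_last: int = 4, tweak: str = "") -> str:
    return _apply_to_digits(value, keep_last, lambda s: fpe_encrypt(key, s, tweak=tweak))


def unprotect(key: bytes, value: str, keep_last: int = 4, tweak: str = "") -> str:
    return _apply_to_digits(value, keep_last, lambda s: fpe_decrypt(key, s, tweak=tweak))
```

For example, `protect(key, "123-45-6789", tweak="ssn")` returns another `DDD-DD-6789` string, and `unprotect` with the same key and tweak restores the original. Because the cipher is keyed and deterministic, a given SSN always maps to the same protected value, which is what lets joins and counts keep working on protected columns.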

Hadoop security in the healthcare industry

One current application is at a U.S. government agency that uses a big data facility secured by Voltage SecureData to share 100 terabytes of patient healthcare information. Independent R&D institutes across the country access and analyze the data for emerging risks and patterns. All data is de-identified at the field level before release, in full compliance with HIPAA/HITECH. When researchers identify health risks that may affect a population whose living members are represented in the data, the agency can quickly and securely re-identify and contact the affected individuals for proactive treatment.
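The de-identify and re-identify workflow above can be sketched in a few lines. The agency's deployment uses Voltage SecureData; to keep this example short, the sketch swaps in vault-based tokenization (the alternative to FPE mentioned in the performance discussion), where random same-format tokens are kept in a lookup table. The `TokenVault` class, the helper functions and the field names are hypothetical.

```python
import secrets
from dataclasses import dataclass, field

DIGITS = "0123456789"
IDENTIFYING_FIELDS = ("patient_id", "ssn")  # hypothetical schema


@dataclass
class TokenVault:
    """Toy token vault; a real one would be encrypted, access-controlled and audited."""
    to_token: dict[str, str] = field(default_factory=dict)
    to_value: dict[str, str] = field(default_factory=dict)

    def tokenize(self, value: str) -> str:
        if value in self.to_token:  # same input, same token, so joins still line up
            return self.to_token[value]
        for _ in range(1000):
            # Replace each digit with a random digit; keep separators such as '-'.
            token = "".join(secrets.choice(DIGITS) if ch in DIGITS else ch for ch in value)
            if token not in self.to_value:
                break
        else:
            raise RuntimeError("token space exhausted for this format")
        self.to_token[value] = token
        self.to_value[token] = value
        return token

    def detokenize(self, token: str) -> str:
        return self.to_value[token]


def deidentify(record: dict[str, str], vault: TokenVault) -> dict[str, str]:
    """Tokenize identifying fields only; clinical fields stay usable for research."""
    return {k: vault.tokenize(v) if k in IDENTIFYING_FIELDS else v for k, v in record.items()}


def reidentify(record: dict[str, str], vault: TokenVault) -> dict[str, str]:
    """Authorized reverse lookup, e.g. to contact a patient flagged by researchers."""
    return {k: vault.detokenize(v) if k in IDENTIFYING_FIELDS else v for k, v in record.items()}
```

The trade-off is that a vault must be stored and protected, while FPE derives the protected value from a key and needs no lookup table.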

So how does this affect performance?

Many applications work directly with FPE-encrypted or tokenized data, with no need for decryption. This is possible because format and referential integrity are preserved, and selected characters can be left “in the clear” so the data can be used without being compromised. For example, purchase behavior could be analyzed by month and year while the specific day and time of each purchase are obfuscated (2011:10:AD 1Y:33:9B). Compute-intensive applications usually run their analytics on protected data and decrypt only the final result set, if needed. Data can be FPE-encrypted at the source, imported into Hadoop, and used without re-encryption. This reduces the time needed to ingest protected data into Hadoop, because no separate encryption step is required at that point.
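The month-and-year purchase analysis described above might look like the following sketch. It assumes timestamps shaped like `YYYY:MM:DD HH:MM:SS` and, for brevity, masks the day and time with a one-way keyed HMAC instead of reversible FPE, so it shows only the "analyze without decrypting" half. The function names are hypothetical, and the masked output will not match the example string in the paragraph above.

```python
import hashlib
import hmac
from collections import Counter

ALNUM = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"


def obfuscate_day_time(key: bytes, ts: str) -> str:
    """Keep 'YYYY:MM' readable; replace the day/time digits with keyed characters.

    Assumes 'YYYY:MM:DD HH:MM:SS'. One-way, unlike FPE: it cannot be decrypted.
    """
    clear, rest = ts[:7], ts[7:]
    digest = hmac.new(key, ts.encode(), hashlib.sha256).digest()  # 32 bytes
    masked = "".join(
        ALNUM[digest[i] % len(ALNUM)] if ch in "0123456789" else ch
        for i, ch in enumerate(rest)
    )
    return clear + masked


def pseudonymize(key: bytes, value: str) -> str:
    """Deterministic keyed pseudonym, so equal IDs stay equal (referential integrity)."""
    return hmac.new(key, value.encode(), hashlib.sha256).hexdigest()[:16]


def purchases_per_month(protected_ts: list[str]) -> Counter:
    """Runs entirely on protected data: the year and month prefix is still in the clear."""
    return Counter(ts[:7] for ts in protected_ts)
```

With three purchases on 2011:10:03, 2011:10:21 and 2011:11:02, `purchases_per_month` over the masked timestamps gives two for `2011:10` and one for `2011:11`, exactly as it would on the raw data, and per-customer counts over `pseudonymize`d IDs likewise match the originals.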

Learn more

Register for the webinar with Voltage Security and Hortonworks, “Don’t Let Data Security be the ‘Elephant in the Room’!”, on Thursday, August 1, 2013, at 10am PT.

Learn more about Apache Knox, the community-driven effort to secure Hadoop.

The post Securing Enterprise Data in Hadoop appeared first on Hortonworks.